Every now and then, a sysadmin or developer ends up wishing they had not created one big partition, or deletes a partition and wants to grow a file system over the space it freed. Besides growing a file system, btrfs also supports shrinking it with a seemingly simple command. Because meddling with file systems and partitions is always a scary operation, I wondered whether the resize operation on btrfs actually moves data.

This simple test uses blktrace and seekwatcher to trace and visualize how the file system actually accesses the underlying block device.

For the test, I'm using kernel 4.9.20 and btrfs-progs 4.7.3.

Preparing test file system

To test this, I set up a 2 GiB disk image and used blktrace on it to record block device access:

    /btrfs-test# dd if=/dev/zero of=btrfs.img bs=1M count=2048
    2048+0 records in
    2048+0 records out
    2147483648 bytes (2.1 GB, 2.0 GiB) copied, 4.6457 s, 462 MB/s
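If the zeros do not need to be physically written, an image of the same size can be created almost instantly as a sparse file. This is a minimal sketch, assuming GNU coreutils (`truncate`, `stat -c`, `du --apparent-size`); the image path defaults to btrfs.img as above.

```shell
#!/bin/bash
# Sketch: create the 2 GiB test image as a sparse file instead of writing zeros with dd.
# Assumes GNU coreutils; the image path can be passed as the first argument.
set -euo pipefail

img="${1:-btrfs.img}"
truncate -s 2G "$img"          # sets the apparent size without allocating data blocks

stat -c '%s bytes' "$img"      # apparent size: 2147483648 bytes, same as the dd result
du -h --apparent-size "$img"   # the size the file claims to have
du -h "$img"                   # the space it actually occupies on the host file system
```

A sparse backing file works the same way with losetup; the trade-off is that the host allocates blocks the first time they are written.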

Since blktrace can only operate on a block device (and not a file), we need to set up the image as a loop device:

    /btrfs-test# losetup -f btrfs.img
    /btrfs-test# losetup -a
    /dev/loop0: [2051]:538617527 (/btrfs-test/btrfs.img)

Let's create a btrfs file system on it:

    /btrfs-test# mkfs.btrfs --mixed /dev/loop0
    btrfs-progs v4.7.3
    See http://btrfs.wiki.kernel.org for more information.

    Performing full device TRIM (2.00GiB) ...
    Label:              (null)
    UUID:
    Node size:          4096
    Sector size:        4096
    Filesystem size:    2.00GiB
    Block group profiles:
      Data+Metadata:    single            8.00MiB
      System:           single            4.00MiB
    SSD detected:       no
    Incompat features:  mixed-bg, extref, skinny-metadata
    Number of devices:  1
    Devices:
       ID        SIZE  PATH
        1     2.00GiB  /dev/loop0

Test scenario

Assuming the allocator places blocks at the start of the device first, I put together a script that forces the file system to write into the second half of the device, then removes the padding files and issues a resize operation to shrink the file system to half its previous size. This should move the data in the later blocks to the first half of the device.

The script makes two copies of the Ubuntu server image (829MB each), using roughly 1660MB, presumably mostly at the start of the disk. These two files serve as padding that forces the file system to use the second half of the disk, where it will (presumably) place the mini.iso file. Then the two large files are deleted to free enough space for the resize operation to complete. The script:

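    #!/bin/bash
    # Assumes the btrfs file system on /dev/loop0 is mounted on testmnt/
    # Padding: two copies of the server image fill most of the device
    cp -v ubuntu-16.04.2-server-amd64.iso testmnt/server-iso1.iso
    cp -v ubuntu-16.04.2-server-amd64.iso testmnt/server-iso2.iso
    sync
    sleep 3
    # The small file should land in the space left near the end of the device
    cp -v mini.iso testmnt/mini.iso
    sync
    sleep 6
    # Remove the padding so the remaining data fits into 1G, then shrink
    rm testmnt/server-iso*
    sync
    sleep 3
    btrfs filesystem resize 1G testmnt/
    sync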
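The sizes in the script explain why the shrink should force a move. Below is a rough calculation, assuming the allocator fills the device from the start, ignoring metadata and system chunks, and treating the 829MB figure as MiB; the variable names are only for illustration.

```shell
#!/bin/bash
# Back-of-the-envelope check of the test layout, all sizes in MiB.
# Assumes the btrfs allocator fills the device from the start and
# ignores metadata/system chunks, so the numbers are approximate.
device_mib=2048   # size of btrfs.img
iso_mib=829       # one copy of the Ubuntu server image (treated as MiB)
target_mib=1024   # new size requested with "btrfs filesystem resize 1G"

padding_mib=$(( 2 * iso_mib ))
left_mib=$(( device_mib - padding_mib ))

echo "padding ends at about ${padding_mib} MiB, leaving ${left_mib} MiB for mini.iso"
if (( padding_mib > target_mib )); then
  echo "mini.iso should start past the ${target_mib} MiB mark, so the shrink has to relocate it"
fi
```

With about 1658 MiB of padding, only about 390 MiB remain, all of it beyond the 1 GiB mark, so whatever is written there has to be moved before the file system can shrink to 1G.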

In another shell, I'm running blktrace:

    /btrfs-test# blktrace -d /dev/loop0 -o trace1
    ^C=== loop0 ===
      CPU  0:                19029 events,      892 KiB data
      CPU  1:                27727 events,     1300 KiB data
      CPU  2:                28491 events,     1336 KiB data
      CPU  3:                28879 events,     1354 KiB data
      Total:                104126 events (dropped 0),     4881 KiB data

The ^C shows that I interrupted it after the test script completed. blktrace writes one file per CPU (trace1.blktrace.0 through trace1.blktrace.3 here) recording which blocks were accessed. seekwatcher takes these files and visualizes them:

    /btrfs-test$ seekwatcher -t trace1.blktrace
    using tracefile ././trace1
    saving graph to trace.png
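The offset plot can also be checked numerically. blkparse (from the blktrace package) turns the per-CPU files into text; the awk filter below is a sketch that assumes blkparse's default line layout (device, CPU, sequence number, time, PID, action, RWBS, sector, `+`, sector count, process) and prints the time and starting offset in MiB of every completed write. The function name write_offsets is hypothetical.

```shell
#!/bin/bash
# Sketch: list completed writes from blkparse's default text output (stdin)
# as "<seconds> <offset in MiB>", i.e. the data seekwatcher's offset plot shows.
# Assumes the layout: dev cpu seq time pid action RWBS sector + sectors [process]
# write_offsets is a hypothetical helper, not part of blktrace or seekwatcher.
write_offsets() {
  awk '$6 == "C" && $7 ~ /W/ && $9 == "+" {
         # sectors are 512-byte units, so 2048 sectors make one MiB
         printf "%s %.1f\n", $4, $8 / 2048
       }'
}
# Usage: blkparse -i trace1 | write_offsets | sort -k2,2n | tail -n 1
```

The usage line prints the completed write that started furthest into the device; if the allocation assumptions above hold, that should belong to mini.iso.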

Test results

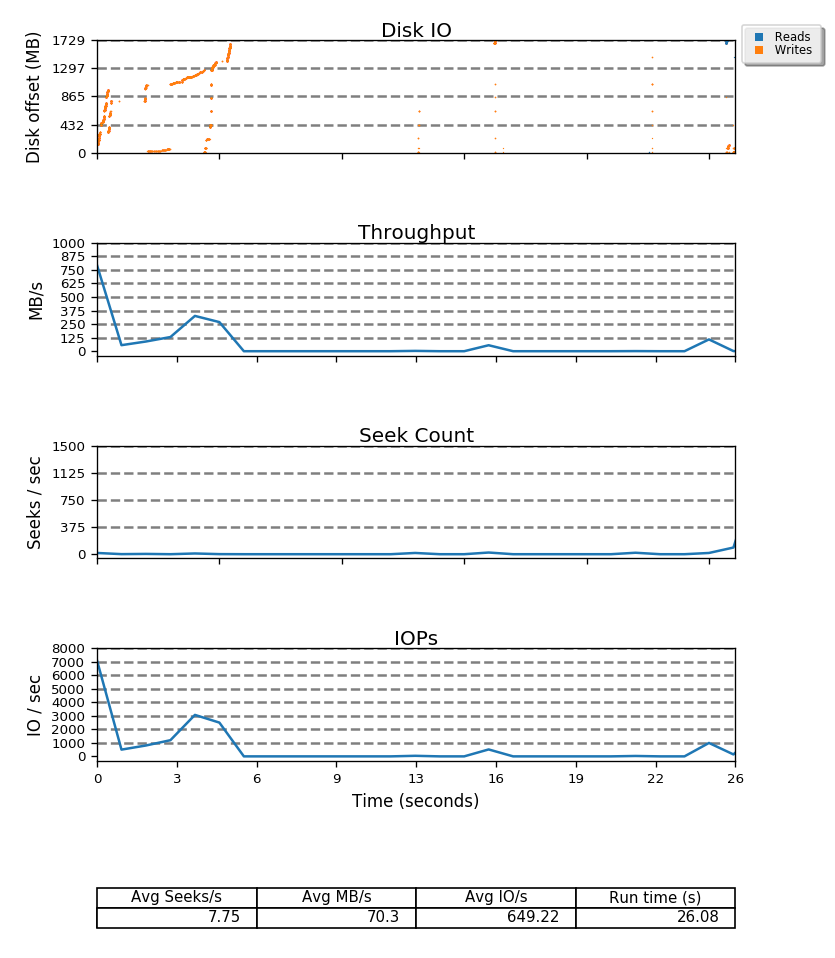

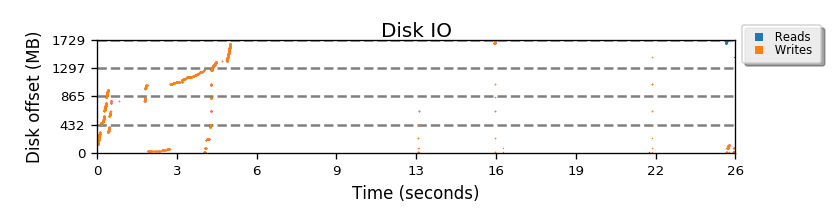

The graph shows that at the start of the script (the first 5 seconds) most of the disk is filled as the two copies of the Ubuntu image are written. At about 16 seconds, mini.iso is written almost at the end of the drive. At about 26 seconds the resize operation runs, and the file system does actually move mini.iso to another place on the device. Yay!

Full seekwatcher graph

Besides disk offset, seekwatcher also plots throughput, seek count and IOPS, all aligned on the same time axis, so you can quickly see what is happening on the disk when you need to, as the full graph below shows.
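The throughput and IOPS panels can be approximated from the same trace. This sketch, again assuming blkparse's default text format and using a hypothetical function name, counts completed non-discard requests (C events) and the data they moved per one-second bucket; seekwatcher's own bucketing may differ.

```shell
#!/bin/bash
# Sketch: per-second completed requests and MiB transferred, read from
# blkparse's default text output on stdin. Assumes the layout
#   dev cpu seq time pid action RWBS sector + sectors [process]
# io_per_second is a hypothetical helper, not part of blktrace or seekwatcher.
io_per_second() {
  awk '$6 == "C" && $7 !~ /D/ && $9 == "+" {   # completions, skipping discards
         sec = int($4)               # one-second bucket
         ios[sec]++                  # completed requests in this second
         bytes[sec] += $10 * 512     # request size is given in 512-byte sectors
       }
       END {
         for (s in ios) printf "%d %d %.2f\n", s, ios[s], bytes[s] / 1048576
       }' | sort -n
}
# Usage: blkparse -i trace1 | io_per_second   # columns: second, requests, MiB
```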